Software testing is an art. Good testing requires a tester's creativity, experience, and intuition, along with proper techniques. Testing is more than just debugging: it is used not only to locate and correct defects, but also in validation, verification, and reliability measurement.

Testing is expensive. Automation is a good way to cut down cost and time. Testing efficiency and effectiveness are the criteria for coverage-based testing techniques. Complete testing is not possible; complexity is the root of the problem. At some point, testing has to stop and the product has to ship. The stopping point can be decided by the trade-off between time and budget, or by whether the reliability estimate of the software product meets the requirement.

Testing may not be the most effective method to improve software quality. Alternative methods, such as inspection and clean-room engineering, may be even better.

1. Black Box Testing is a software testing method in which the internal structure/design/implementation of the item being tested is not known to the tester. These tests can be functional or non-functional, though usually functional. Test design techniques include:

- Equivalence partitioning

- Boundary Value Analysis

- Cause Effect Graphing
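
As a minimal sketch of the first two techniques, suppose a hypothetical function `is_valid_age` is specified to accept integer ages from 18 to 65 inclusive. The function and its limits are assumptions made for illustration only. The test values are derived from that specification alone, without looking at the implementation: equivalence partitioning picks one representative per input class, and boundary value analysis adds the values on and next to each boundary.

```python
def is_valid_age(age: int) -> bool:
    """Hypothetical unit under test: accepts ages 18..65 inclusive."""
    return 18 <= age <= 65


# Equivalence partitioning: one representative value per input class.
partition_cases = {
    10: False,  # class "below the valid range"
    40: True,   # class "inside the valid range"
    80: False,  # class "above the valid range"
}

# Boundary value analysis: values on and next to each boundary.
boundary_cases = {
    17: False, 18: True, 19: True,
    64: True, 65: True, 66: False,
}


def run_cases(cases: dict[int, bool]) -> list[int]:
    """Return the inputs whose actual result differs from the expected one."""
    return [value for value, expected in cases.items()
            if is_valid_age(value) != expected]


# An empty list means every case in the set passed.
print(run_cases(partition_cases))
print(run_cases(boundary_cases))
```

Off-by-one mistakes such as writing `<` instead of `<=` would slip past the three partition cases but be caught by the boundary cases, which is why the two techniques are usually applied together.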

2. White Box Testing is a software testing method in which the internal structure/design/implementation of the item being tested is known to the tester. Test design techniques include:

- Control flow testing

- Data flow testing

- Branch testing

- Path testing
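
Branch testing and path testing can be sketched as follows. The function `shipping_fee` and its pricing rules are hypothetical, chosen only because the code has two decisions in sequence. The inputs are selected by reading the code, so that every branch (and then every path) is executed at least once.

```python
def shipping_fee(total: float, is_member: bool) -> float:
    """Hypothetical unit under test with two decision points."""
    if total >= 100:      # decision 1
        fee = 0.0
    else:
        fee = 10.0
    if is_member:         # decision 2
        fee = fee / 2
    return fee


# Branch testing: two inputs are enough here.
# Case 1 takes the true branch of both decisions,
# case 2 takes the false branch of both decisions.
branch_cases = [
    ((150.0, True), 0.0),
    ((50.0, False), 10.0),
]

# Path testing is stricter: two decisions in sequence give four paths,
# so the two remaining true/false combinations are added.
path_cases = branch_cases + [
    ((150.0, False), 0.0),
    ((50.0, True), 5.0),
]

for args, expected in path_cases:
    assert shipping_fee(*args) == expected
```

The branch set alone never combines a small order with a membership discount, so a defect on that path would go unnoticed until the path cases are added.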

Commonly used types of testing include:

- Unit testing : Testing of individual software components or modules. Typically done by the programmer and not by testers, as it requires detailed knowledge of the internal program design and code. This may require developing test driver modules or test harnesses.

- Incremental integration testing : Bottom-up approach to testing, i.e., continuous testing of an application as new functionality is added. Application functionality and modules should be independent enough to test separately. Done by programmers or by testers.

- Integration testing : Testing of integrated modules to verify combined functionality after integration. Modules are typically code modules, individual applications, client and server applications on a network, etc. This type of testing is especially relevant to client/server and distributed systems.

- Functional testing : This type of testing ignores the internal parts and focuses on whether the output meets the requirements. Black-box type testing geared to the functional requirements of an application.

- System testing : The entire system is tested against the requirements. Black-box type testing that is based on the overall requirements specification and covers all combined parts of a system.

- End-to-end testing : Similar to system testing. Involves testing a complete application environment in a situation that mimics real-world use, such as interacting with a database, using network communications, or interacting with other hardware, applications, or systems if appropriate.

- Sanity testing : Testing to determine whether a new software version is performing well enough to accept it for a major testing effort. If the application crashes on initial use, the system is not stable enough for further testing, and the build is sent back to be fixed.

- Regression testing : Retesting the application as a whole after a modification to any module or functionality. It is difficult to cover the whole system in regression testing, so automation tools are typically used for this type of testing.

- Acceptance testing : Normally this type of testing is done to verify whether the system meets the customer-specified requirements. The user or customer does this testing to determine whether to accept the application.

- Load testing : A type of performance testing that checks system behavior under load. An application is tested under heavy loads, such as a web site under a range of loads, to determine at what point the system's response time degrades or fails.

- Stress testing : The system is stressed beyond its specifications to check how and when it fails. Performed under heavy load, such as input volumes beyond storage capacity, complex database queries, or continuous input to the system or database.

- Performance testing : A term often used interchangeably with 'stress' and 'load' testing. Checks whether the system meets its performance requirements. Various performance and load tools are used for this.

- Usability testing : User-friendliness check. The application flow is tested: can a new user understand the application easily, and is proper help documented wherever a user might get stuck? Essentially, system navigation is checked in this testing.

- Install/uninstall testing : Testing of full, partial, or upgrade install/uninstall processes on different operating systems under different hardware and software environments.

- Recovery testing : Testing how well a system recovers from crashes, hardware failures, or other catastrophic problems.

- Security testing : Can the system be penetrated by any form of hacking? Testing how well the system protects against unauthorized internal or external access. Checks whether the system and database are safe from external attacks.

- Compatibility testing : Testing how well software performs in a particular hardware/software/operating system/network environment and in different combinations of the above.

- Comparison testing : Comparison of product strengths and weaknesses with previous versions or other similar products.

- Alpha testing : An in-house virtual user environment can be created for this type of testing. Testing is done at the end of development. Minor design changes may still be made as a result of such testing.

- Beta testing : Testing typically done by end users or others. Final testing before releasing the application for commercial use.
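
Because a regression suite is re-run after every change, it is usually automated. The following is a minimal sketch using Python's standard `unittest` module. The `slugify` function and the recorded cases are hypothetical stand-ins for application code and for behavior that was verified in earlier releases.

```python
import re
import unittest


def slugify(title: str) -> str:
    """Hypothetical application code that is modified over time."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


# Behavior verified in earlier releases; any change in output is a regression.
RECORDED_CASES = [
    ("Hello World", "hello-world"),
    ("  Test Plan v2  ", "test-plan-v2"),
    ("C++ & Python", "c-python"),
]


class SlugifyRegressionTest(unittest.TestCase):
    def test_recorded_cases(self):
        for title, expected in RECORDED_CASES:
            # subTest reports each failing case separately.
            with self.subTest(title=title):
                self.assertEqual(slugify(title), expected)


# Run the suite programmatically, as an automation tool or CI job would.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(SlugifyRegressionTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Each defect fixed in the field would typically add one more entry to `RECORDED_CASES`, so the suite grows with the product.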

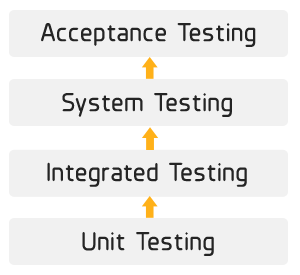

- Unit Testing is a level of the software testing process where individual units/components of a software/system are tested. The purpose is to validate that each unit of the software performs as designed.

- Integration Testing is a level of the software testing process where individual units are combined and tested as a group. The purpose of this level of testing is to expose faults in the interaction between integrated units.

- System Testing is a level of the software testing process where a complete, integrated system/software is tested. The purpose of this test is to evaluate the system's compliance with the specified requirements.

- Acceptance Testing is a level of the software testing process where a system is tested for acceptability. The purpose of this test is to evaluate the system's compliance with the business requirements and assess whether it is acceptable for delivery.
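
The difference between the first two levels can be sketched in a few lines. The two units below, `parse_amount` and `apply_tax`, and the 20% default tax rate are hypothetical. The unit-level checks exercise each function in isolation, while the integration-level check verifies that the output of one unit is a valid input to the other.

```python
def parse_amount(text: str) -> int:
    """Unit 1: convert a string such as '12.50' into integer cents."""
    units, _, cents = text.strip().partition(".")
    # Pad or truncate the fractional part to exactly two digits.
    return int(units) * 100 + int((cents + "00")[:2])


def apply_tax(cents: int, rate_percent: int = 20) -> int:
    """Unit 2: add tax to an amount in cents (integer division rounds down)."""
    return cents + cents * rate_percent // 100


# Unit level: each unit is tested on its own.
assert parse_amount("12.50") == 1250
assert parse_amount("3") == 300
assert apply_tax(1000) == 1200

# Integration level: the units are combined and tested as a group.
assert apply_tax(parse_amount("12.50")) == 1500
```

Both units can pass their own tests and still fail together, for example if one returned cents and the other expected whole currency units. That kind of interface fault is what the integration level is meant to expose.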

TEST PLAN

A Software Test Plan is a document describing the testing scope and activities. It is the basis for formally testing any software/product in a project.

TEST PLAN TYPES

One can have the following types of test plans:

- Master Test Plan : A single high-level test plan for a project/product that unifies all other test plans.

- Testing Level Specific Test Plans : Plans for each level of testing.

  - Unit Test Plan

  - Integration Test Plan

  - System Test Plan

  - Acceptance Test Plan

- Testing Type Specific Test Plans : Plans for major types of testing like Performance Test Plan and Security Test Plan.

TEST PLAN TEMPLATE

The format and content of a software test plan vary depending on the processes, standards, and test management tools being implemented. Nevertheless, the following format, which is based on the IEEE standard for software test documentation, provides a summary of what a test plan can or should contain.

Test Plan Identifier :

- Provide a unique identifier for the document. (Adhere to the Configuration Management System if you have one.)

Introduction :

- Provide an overview of the test plan.

- Specify the goals/objectives.

- Specify any constraints.

References :

- List the related documents, with links to them if available, including the following:

  - Project Plan

  - Configuration Management Plan

Test Items :

- List the test items (software/products) and their versions.

Features to be Tested :

- List the features of the software/product to be tested.

- Provide references to the Requirements and/or Design specifications of the features to be tested.

Features Not to Be Tested :

- List the features of the software/product which will not be tested.

- Specify the reasons these features won't be tested.

Approach :

- Mention the overall approach to testing.

- Specify the testing levels [if it's a Master Test Plan], the testing types, and the testing methods [Manual/Automated; White Box/Black Box/Gray Box].

Item Pass/Fail Criteria :

- Specify the criteria that will be used to determine whether each test item (software/product) has passed or failed testing.

Suspension Criteria and Resumption Requirements :

- Specify criteria to be used to suspend the testing activity.

- Specify testing activities which must be redone when testing is resumed.

Test Deliverables :

- List test deliverables, and links to them if available, including the following:

  - Test Plan (this document itself)

  - Test Cases

  - Test Scripts

  - Defect/Enhancement Logs

  - Test Reports

Test Environment :

- Specify the properties of the test environment: hardware, software, network, etc.

- List any testing or related tools.

Estimate :

- Provide a summary of test estimates (cost or effort) and/or provide a link to the detailed estimation.

Schedule :

- Provide a summary of the schedule, specifying key test milestones, and/or provide a link to the detailed schedule.

Staffing and Training Needs :

- Specify staffing needs by role and required skills.

- Identify training that is necessary to provide those skills, if not already acquired.

Responsibilities :

- List the responsibilities of each team/role/individual.

Risks :

- List the risks that have been identified.

- Specify the mitigation plan and the contingency plan for each risk.

Assumptions and Dependencies :

- List the assumptions that have been made during the preparation of this plan.

- List the dependencies.

Approvals :

- Specify the names and roles of all persons who must approve the plan.

- Provide space for signatures and dates. (If the document is to be printed.)
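
When a plan is kept in a structured form, checking it for completeness against the template can be automated. The sketch below is one assumption about how that might look and is not part of the IEEE standard. The section names are copied from the template above, while the `missing_sections` helper, the dictionary layout, and the sample identifier are hypothetical.

```python
REQUIRED_SECTIONS = [
    "Test Plan Identifier", "Introduction", "References", "Test Items",
    "Features to be Tested", "Features Not to Be Tested", "Approach",
    "Item Pass/Fail Criteria",
    "Suspension Criteria and Resumption Requirements",
    "Test Deliverables", "Test Environment", "Estimate", "Schedule",
    "Staffing and Training Needs", "Responsibilities", "Risks",
    "Assumptions and Dependencies", "Approvals",
]


def missing_sections(plan: dict[str, str]) -> list[str]:
    """Return the template sections that are absent or empty in the plan."""
    return [name for name in REQUIRED_SECTIONS
            if not plan.get(name, "").strip()]


draft = {
    "Test Plan Identifier": "TP-EXAMPLE-001",  # hypothetical identifier
    "Introduction": "System test plan for the example release.",
    "Approach": "   ",  # whitespace only, so it counts as missing
}

# Lists every section that still has to be written before review.
print(missing_sections(draft))
```

A check like this only confirms that each section exists. Whether the content is adequate still has to be judged by the people named under Approvals.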